Event-Based Architecture Overview

Journey Manager (JM) | System Manager / DevOps | This feature was updated in 24.04.

Manager comes with the Event-Based Architecture (EBA), which allows you to subscribe to event topics and publish events to them. The topics run on Apache Kafka, a distributed event store and stream-processing platform that provides a unified, high-throughput, low-latency platform for handling real-time data feeds. For more information, see https://kafka.apache.org/intro.

This makes it possible to decouple some of Manager's core functionality so that it can be externalized. For example, you may want to replace the collaboration jobs workflow engine with an external product of your choice. This, in turn, improves integration with third-party systems.

The Event-Based Architecture is based on the concept of events, which encourages you to think of events first, rather than things and their states. An event is something that happens; for example, a change to data can be an event. In Manager, most events are changes to data of some sort, such as a form submission.

The event-based architecture uses the Inbox and Outbox patterns. We've implemented them using the Kafka Subscriber and the Kafka Producer, which are Kafka clients that listen for events on, and publish events to, different topics in a Kafka cluster.

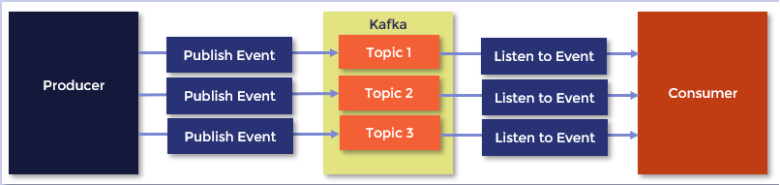

The simplified Kafka event flow is illustrated below:

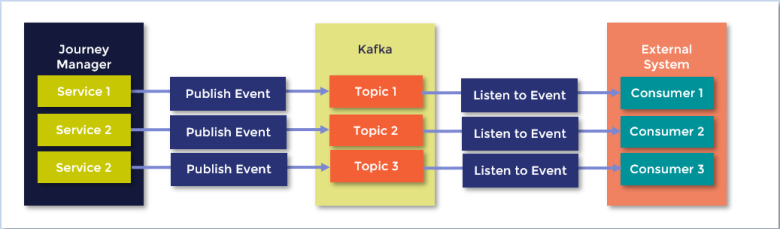

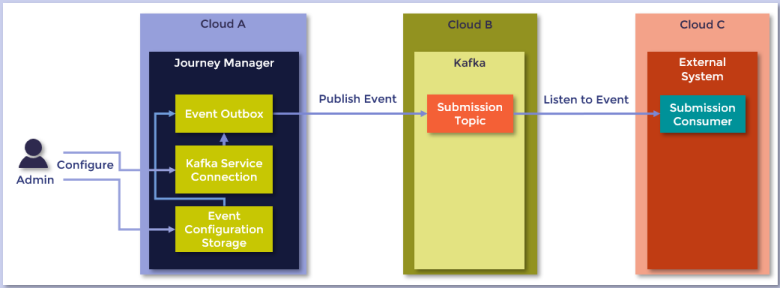

In terms of the event-based architecture, Journey Manager can be a producer of events, a consumer of events, or both. The diagram below shows Journey Manager as a producer, with an external system as a consumer of its events:

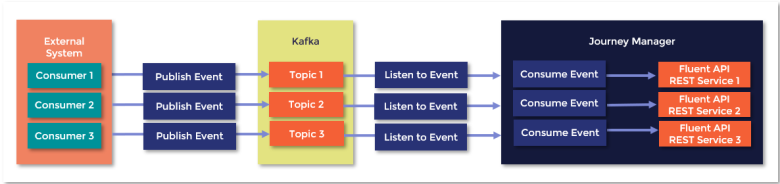

The next diagram shows Journey Manager as a consumer of events produced by the external system. This feature was introduced in 24.10.

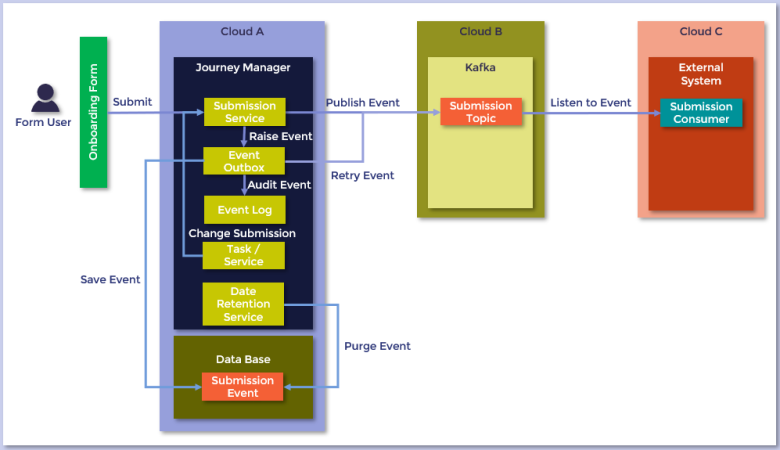

The event publishing mechanism kicks in when submission data is created or modified. For example, when a form user clicks a Submit button on an Onboarding application form, the Submission Service raises an event. A similar event is raised if a service or a task updates or deletes submission data. This is illustrated in a diagram below:

The Submission Service passes the new event to the Event Outbox component, which publishes it to a Kafka topic. The Event Outbox also persists the event in a local database so that it can still be published if the first publishing attempt fails. Several other components are involved to make sure that event processing is audited and that all data is purged according to your data retention management.

Let's have a detailed look at the sequence of events when a form user submits a form:

- A customer fills in an onboarding form and submits details, as defined in the onboarding process, to Journey Manager's submission service.

- Various events are triggered depending on the custom event configuration. Events are persisted in the database and sent to the Event Outbox for publishing.

- Auditing is also performed on the event generation.

- Further changes to the submission may trigger additional events, with additional entries persisted in the Event Outbox database.

- Journey Manager publishes the event on the topic defined in the Event Configuration. If a connection error occurs, publishing is retried within a defined period of time, up to a defined number of retries.

- The event is removed from the database on successful delivery to the defined service connection, which in this case is the Kafka service connection.

- Details of the triggered event are stored as part of the submission details in the Event Log.

- External systems that have subscribed to the Kafka topics defined in the Event Configuration are notified when the trigger occurs.
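The persist, publish, retry, and remove steps above can be sketched in a few lines of Python. This is a minimal illustration of the outbox pattern under stated assumptions, not Journey Manager's implementation: the `EventOutbox` class, the table layout, the topic name, and the `flaky_publish` function are all hypothetical, an in-memory SQLite database stands in for the Manager database, and a plain function stands in for the Kafka producer.

```python
import json
import sqlite3


class EventOutbox:
    """Illustrative outbox: persist first, publish later, delete on success."""

    def __init__(self, publish, max_retries=3):
        self.publish = publish            # hypothetical callable: (topic, payload) -> None
        self.max_retries = max_retries    # assumed retry limit
        self.event_log = []               # stands in for auditing / the Event Log
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE outbox ("
            "id INTEGER PRIMARY KEY, topic TEXT, payload TEXT, "
            "attempts INTEGER DEFAULT 0)"
        )

    def raise_event(self, topic, event):
        """Persist the event so that it survives a failed publish attempt."""
        cur = self.db.execute(
            "INSERT INTO outbox (topic, payload) VALUES (?, ?)",
            (topic, json.dumps(event)),
        )
        self.db.commit()
        self.event_log.append(f"raised event {cur.lastrowid}")
        return cur.lastrowid

    def flush(self):
        """Attempt one publish per pending event that still has retries left."""
        rows = self.db.execute(
            "SELECT id, topic, payload FROM outbox WHERE attempts < ?",
            (self.max_retries,),
        ).fetchall()
        for event_id, topic, payload in rows:
            try:
                self.publish(topic, payload)
            except ConnectionError:
                # Keep the row and count the failed attempt.
                self.db.execute(
                    "UPDATE outbox SET attempts = attempts + 1 WHERE id = ?",
                    (event_id,),
                )
                self.event_log.append(f"publish failed for event {event_id}")
            else:
                # Successful delivery: remove the event from the outbox.
                self.db.execute("DELETE FROM outbox WHERE id = ?", (event_id,))
                self.event_log.append(f"published event {event_id}")
        self.db.commit()

    def pending(self):
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]


delivered = []
failures = {"left": 1}  # simulate one connection error, then success


def flaky_publish(topic, payload):
    if failures["left"] > 0:
        failures["left"] -= 1
        raise ConnectionError("broker unreachable")
    delivered.append((topic, payload))


outbox = EventOutbox(flaky_publish)
outbox.raise_event("submission-events", {"type": "SubmissionCreated", "id": 42})
outbox.flush()  # first attempt fails; the event stays in the outbox
outbox.flush()  # the retry succeeds; the event is removed
```

Because the event is written to the database before any publish attempt, a broker outage delays delivery rather than losing the event.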

For the event-based architecture to work correctly, you need to configure Manager in a way that certain events are published in corresponding topics in the Kafka cluster. This is illustrated in a diagram below:

There's some configuration required to enable publish and subscribe event flows in Manager. Your Administrator or the Cloud Services team can easily perform it by following the configuration steps below. This is a common task that must be done after Manager is installed or upgraded.

Publishing Flow

To start generating events and publishing them to Kafka topics:

- Create and configure a Kafka Service Connection.

- Create a custom Event Configuration Storage service which uses the Kafka Service Connection.

- Enable the Eventing Feature Enabled deployment property to activate the eventing framework, so all triggered events are stored in the Event Outbox.

- The Event Outbox publishes the events to Kafka on a topic as configured in the Kafka Service Connection.

- Any external system, subscribed to Kafka on the topics as defined in the Event Configuration, is notified when an event is triggered and published to Kafka by Manager.
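As a rough mental model of the publishing flow, the sketch below shows how the deployment property and the event configuration together decide whether a triggered event reaches the outbox. It is an assumption-laden illustration only: the `EventingSetup` class, the event type names, and the topic name are hypothetical, and the real settings are made through Manager configuration rather than code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class EventingSetup:
    # Stands in for the "Eventing Feature Enabled" deployment property.
    eventing_enabled: bool = False
    # Hypothetical event configuration: event type -> Kafka topic.
    event_topics: Dict[str, str] = field(default_factory=dict)
    # Stands in for the Event Outbox.
    outbox: List[Tuple[str, dict]] = field(default_factory=list)

    def trigger(self, event_type: str, payload: dict) -> Optional[str]:
        """Queue the event for its topic, or return None if it is not published."""
        if not self.eventing_enabled:
            return None  # eventing framework is switched off
        topic = self.event_topics.get(event_type)
        if topic is None:
            return None  # no event configuration for this event type
        self.outbox.append((topic, payload))
        return topic


setup = EventingSetup(event_topics={"SubmissionCompleted": "onboarding.submissions"})
before = setup.trigger("SubmissionCompleted", {"submissionId": "abc123"})
setup.eventing_enabled = True
after = setup.trigger("SubmissionCompleted", {"submissionId": "abc123"})
unmapped = setup.trigger("SubmissionAbandoned", {"submissionId": "abc123"})
```

In this model, nothing is queued until the property is enabled, and only event types with a configured topic are queued afterwards.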

Subscribing Flow

To subscribe to topics and start listening to events from Kafka:

- Create an HTTP Endpoint service connection that points to your Manager REST endpoint.

- Update the Event Inbox Processor Service with this service connection so it will be used to execute REST API calls.

- Create a Kafka Service Connection to connect to a Kafka service with specified topics.

- Create an Event Listener Configuration Service which uses the Kafka Service Connection to subscribe to these topics.

- Check the service is running and listening to events.

- The Event Inbox Processor consumes events that are added to its queue by Event Listener Configuration services and executes REST API calls using the properties defined for each topic.

- The external system that published the event to the Kafka topic is notified when the event is consumed, and expects that the REST API call has been made.
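The subscribing flow can be pictured as a queue sitting between the listeners and the REST endpoint. The following sketch assumes hypothetical names throughout: the `EventInbox` class, the topic name, the per-topic properties, the URL, and the path are invented for illustration, and a fake function stands in for the HTTP Endpoint service connection.

```python
from collections import deque

# Hypothetical per-topic properties describing the REST call to make.
TOPIC_PROPERTIES = {
    "onboarding-decisions": {
        "method": "PUT",
        "path": "/example/submissions/{submissionId}/status",
    },
}


class EventInbox:
    """Illustrative inbox: listeners enqueue events, the processor calls REST."""

    def __init__(self, base_url, rest_call, topic_properties):
        self.base_url = base_url
        self.rest_call = rest_call        # hypothetical: (method, url, body) -> status
        self.topic_properties = topic_properties
        self.queue = deque()
        self.processed = []

    def on_event(self, topic, event):
        """Called by a listener for each event received on a subscribed topic."""
        self.queue.append((topic, event))

    def process(self):
        """Drain the queue, making one REST call per event."""
        while self.queue:
            topic, event = self.queue.popleft()
            props = self.topic_properties[topic]
            url = self.base_url + props["path"].format(**event)
            status = self.rest_call(props["method"], url, event)
            self.processed.append((topic, status))


calls = []


def fake_rest_call(method, url, body):
    # Records the request instead of sending it over the network.
    calls.append((method, url, body))
    return 200


inbox = EventInbox("https://manager.example.com", fake_rest_call, TOPIC_PROPERTIES)
inbox.on_event("onboarding-decisions", {"submissionId": "abc123", "status": "approved"})
inbox.process()
```

Keeping the queue between the listener and the REST call means a slow endpoint delays processing without blocking the listener from receiving further events.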

Next, learn about the event definition.